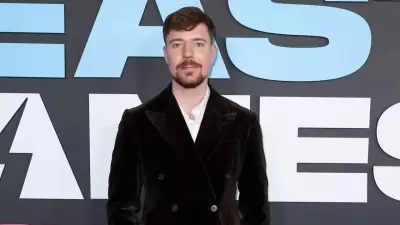

Scottish Philosopher Amanda Askell Shapes the Moral Compass of Claude at $350B Anthropic

Amanda Askell, a Scottish academic turned AI researcher, plays a central role at Anthropic, the artificial intelligence company valued at $350 billion that builds the chatbot Claude. As the Wall Street Journal describes it, her job is simple to state and enormous in scope: she is tasked with teaching Claude how to be good. In practice, that means instilling ethical behavior and moral reasoning in one of the world's most sophisticated AI systems.

From Scottish Classrooms to Silicon Valley Leadership

Born Amanda Hall in Prestwick, on Scotland's west coast, Askell was raised by her mother, a teacher. A precocious student, she read widely, including the works of J.R.R. Tolkien and C.S. Lewis, and developed an early fascination with the deep philosophical questions that would later shape her career.

Askell studied philosophy and fine art at the University of Dundee, then earned a BPhil in philosophy from the University of Oxford and completed her PhD at New York University in 2018. Her doctoral thesis examined the ethical puzzles that arise when considering a universe containing infinitely many people, a background that has proved unexpectedly relevant for someone now guiding the moral development of artificial intelligence.

Before joining Anthropic, Askell worked on AI safety and policy at OpenAI from 2018 to 2021. There she co-authored the influential GPT-3 paper, but she ultimately left over concerns that safety was not being given sufficient priority.

Teaching Claude "How to Be Good" Through Constitutional AI

Askell joined Anthropic in 2021 and now leads its personality alignment work. She is a key architect of "Constitutional AI," an approach that trains AI models using a written set of principles, in effect a constitution, to guide their behavior and decision-making.

Instead of relying exclusively on human feedback, Constitutional AI has the model critique and revise its own responses against these written principles. Askell wrote a large share of the latest constitution, a 30,000-word document released in January 2026 that is designed to steer Claude toward the core values of helpfulness, honesty, and harmlessness.

She has described her work as helping models "understand and grapple with the constitution" through techniques such as reinforcement learning and synthetic data generation. In interviews, Askell has compared her role to that of a parent guiding a child's development. Her work includes:

- She studies Claude's reasoning patterns to identify where it can improve.

- She writes and refines prompts, some running to more than 100 pages, to improve Claude's responses.

- She shapes Claude's personality, encouraging traits such as curiosity, emotional intelligence, and moral self-reflection.

Askell has also studied whether AI systems are capable of what she calls "moral self-correction." In a 2023 paper co-authored with Deep Ganguli and others, the researchers found that sufficiently large language models trained with reinforcement learning from human feedback can reduce biased or harmful outputs when instructed to do so in natural language.

Balancing Optimism and Risk in a Scrutinized Industry

Askell works in an industry under intense public and regulatory scrutiny. AI systems have been linked to the spread of misinformation, emotional manipulation, and harmful responses in sensitive situations, and there is widespread public concern about job losses and broader social disruption from rapid AI progress.

Despite these risks, Askell remains cautiously optimistic. She argues that robust societal "checks and balances" can help keep AI systems under control, and she believes that how people treat and interact with AI will significantly shape how these systems develop and behave.

A Philosopher with a Profound Conscience

Beyond her research, Askell is committed to ethical living and philanthropy. As a member of Giving What We Can, she has pledged to donate at least 10% of her lifetime income to charity, and potentially more than 50%, with a primary focus on alleviating global poverty.

In recognition of her work, Askell was named to the TIME100 AI list in 2024. As AI systems grow more powerful, the question of their character becomes more urgent. At Anthropic, much of that responsibility rests with Askell, a philosopher tasked not just with building smarter machines but with shaping their moral compass for the benefit of humanity.