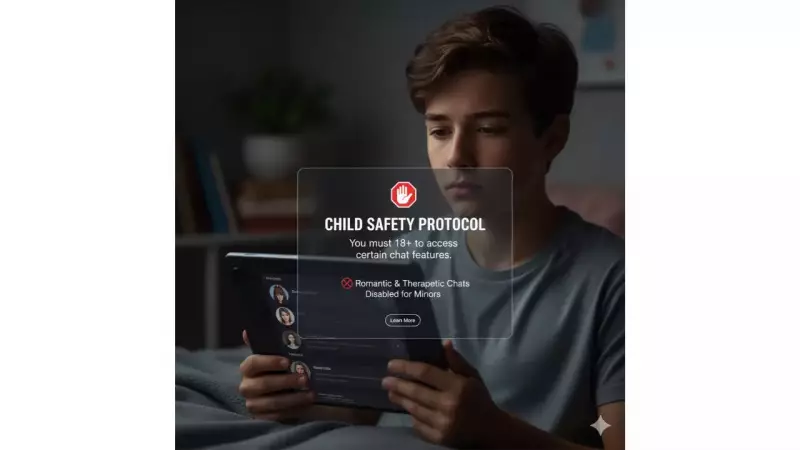

Character AI has introduced strict new policies designed to protect younger users from inappropriate content. The popular chatbot platform now blocks minors from NSFW (Not Safe For Work) conversations and other adult-oriented interactions.

The Safety-First Approach

Character AI's CEO and co-founder, Noam Shazeer, has taken a firm stance on digital safety. "Our priority is creating a secure environment for all users," Shazeer emphasized in recent statements. The platform's age verification systems now automatically restrict underage users from conversations that fall outside appropriate content boundaries.

How The Protection System Works

The implementation represents one of the most comprehensive age-restriction systems in the AI chatbot industry:

- Automatic age detection and content filtering mechanisms

- Strict blocking of NSFW and adult-oriented chatbot interactions for verified minors

- Enhanced monitoring systems to ensure compliance with safety standards

- Clear content guidelines that define appropriate conversation boundaries
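
To make the idea of age-based content gating concrete, here is a minimal sketch in Python. It is not Character AI's implementation, which has not been published; every name in it (`User`, `ContentRating`, `is_allowed`, and the 18-year threshold) is a hypothetical chosen for illustration. It shows one plausible rule: general content is open to everyone, while adult-rated content requires a known age at or above the threshold.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class ContentRating(Enum):
    """Hypothetical content labels; a real platform may use finer categories."""
    GENERAL = "general"
    ADULT = "adult"  # NSFW or adult-oriented conversations


ADULT_AGE = 18  # assumed threshold, not taken from the article


@dataclass(frozen=True)
class User:
    user_id: str
    age: Optional[int]  # None means the user's age could not be determined


def is_allowed(user: User, rating: ContentRating) -> bool:
    """Return True if this user may access content with this rating."""
    if rating is ContentRating.GENERAL:
        return True
    # Fail closed: an unknown age is treated the same as a minor.
    return user.age is not None and user.age >= ADULT_AGE


if __name__ == "__main__":
    teen = User("u1", 15)
    adult = User("u2", 30)
    unknown = User("u3", None)
    for u in (teen, adult, unknown):
        print(u.user_id, is_allowed(u, ContentRating.ADULT))
```

Treating an unknown age as a minor is a design choice in this sketch: it trades some convenience for adult users against the risk of exposing a minor whose age was never established.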

Industry Impact and User Response

This proactive approach positions Character AI at the forefront of responsible AI development. While some users have expressed concerns about accessibility, the response from parents and educators, who appreciate the platform's commitment to protecting younger audiences, has been overwhelmingly positive.

Shazeer's leadership in implementing these measures highlights the growing importance of ethical considerations in AI development. "We believe this is the right direction for the industry," he stated, expressing hope that other platforms will follow suit in prioritizing user safety, particularly for vulnerable age groups.

The move comes amid increasing global scrutiny of AI platforms and their content moderation policies, setting a new benchmark for responsible AI deployment in consumer-facing applications.