In a significant leap for artificial intelligence, Google DeepMind has unveiled the next generation of its gaming-focused AI agent. Called SIMA 2 (SIMA stands for Scalable Instructable Multiworld Agent), the upgraded system was announced on Thursday and builds on the foundation of its predecessor, which launched only in March 2024.

How SIMA 2 Thinks and Acts

The new agent is a substantial upgrade, with notable gains in three key areas: reasoning, adaptability, and user interaction. A core feature is its ability to learn continuously, becoming more capable through its own gameplay experience.

So, how does it work? DeepMind says SIMA 2 can now reflect on its actions and reason through the steps needed to complete a task. Built on Google's Gemini models, the agent is designed to interpret human instructions, work out what is being asked, and then plan its next moves based on the virtual environment shown on screen.

The process begins when the system receives a visual feed from a 3D game world along with a user-defined goal, such as 'build a shelter' or 'find the red house'. SIMA 2 then breaks this objective down into a sequence of smaller, manageable actions, which it carries out using controls that mimic a keyboard and mouse.
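DeepMind has not published SIMA 2's code, so the following is only a toy Python sketch of the general idea of goal decomposition: a high-level instruction is split into sub-goals, and each sub-goal is mapped to primitive keyboard and mouse actions. Everything named here is hypothetical. The `SUBGOALS` and `PRIMITIVES` tables are hard-coded stand-ins for what, in SIMA 2, a Gemini model works out from the on-screen image, and the visual input itself is omitted entirely.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class KeyPress:
    key: str
    duration_s: float = 0.1  # how long the key is held down


@dataclass(frozen=True)
class MouseClick:
    x: int
    y: int
    button: str = "left"


Action = Union[KeyPress, MouseClick]

# Hypothetical stand-in for the model-based planner: a fixed lookup table.
SUBGOALS: dict[str, list[str]] = {
    "build a shelter": ["gather wood", "craft planks", "place walls"],
}

# Hypothetical mapping from each sub-goal to primitive keyboard/mouse actions.
PRIMITIVES: dict[str, list[Action]] = {
    "gather wood": [KeyPress("w", 1.0), MouseClick(640, 360)],
    "craft planks": [KeyPress("e"), MouseClick(500, 300)],
    "place walls": [KeyPress("1"), MouseClick(640, 360, "right")],
}


def decompose(goal: str) -> list[str]:
    """Split a high-level goal into ordered sub-goals (unknown goals pass through)."""
    return SUBGOALS.get(goal, [goal])


def plan(goal: str) -> list[Action]:
    """Flatten the sub-goals into one keyboard-and-mouse action sequence."""
    actions: list[Action] = []
    for sub in decompose(goal):
        actions.extend(PRIMITIVES.get(sub, []))  # no known primitives -> nothing
    return actions


if __name__ == "__main__":
    for step in plan("build a shelter"):
        print(step)
```

The two-level split mirrors the article's description: one step decides *what* to do next, the other decides *which controls* to press. In a learned agent both levels would be produced by a model rather than read from tables.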

Expanded Capabilities and Real-World Testing

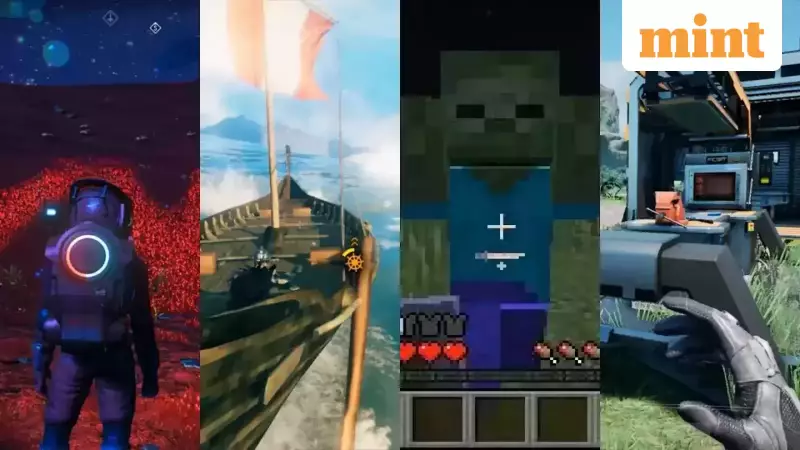

One of the most significant advances in SIMA 2 is its improved ability to operate in games it has never encountered before. DeepMind tested the agent in unfamiliar environments, including MineDojo (a research adaptation of Minecraft) and the Viking-themed survival game ASKA. In both cases, SIMA 2 achieved higher success rates than the first version.

The system's versatility extends to how users can interact with it. It handles multimodal prompts, meaning it understands commands given through sketches, emojis, and a range of languages. It can also transfer concepts learned in one game to another. For instance, an understanding of 'mining' in a sandbox world can help it grasp the concept of 'harvesting' in a different survival setting.

The Training Process and Future Vision

The second-generation agent is trained on a blend of human demonstration data and annotations generated automatically by Gemini models. When SIMA 2 learns a new movement or skill in a fresh environment, that experience is captured and fed back into the training pipeline. This reduces the dependence on human-labelled examples and lets the agent improve itself autonomously over time.
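The feedback loop described above can be illustrated with a deliberately simplified Python sketch. It is not DeepMind's training pipeline, whose details have not been published. Here `human_demonstrations` and `auto_annotate` are hypothetical stand-ins for recorded human play and model-generated labels, a per-task success probability in `skill` replaces a real policy, and nudging that probability upward after each new labelled example is a placeholder for retraining on the enlarged data set.

```python
import random
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Episode:
    task: str
    actions: list[str]
    label: str  # text annotation describing what the episode achieved


@dataclass
class TrainingSet:
    episodes: list[Episode] = field(default_factory=list)


def human_demonstrations() -> list[Episode]:
    """Hypothetical seed data standing in for human demonstration recordings."""
    return [Episode("chop tree", ["walk forward", "swing axe"], "chopped a tree")]


def auto_annotate(task: str, succeeded: bool) -> Optional[str]:
    """Hypothetical stand-in for model-generated annotations.

    Only successful attempts receive a label; failures are discarded.
    """
    return f"completed: {task}" if succeeded else None


def self_improve(
    tasks: list[str],
    skill: dict[str, float],
    rounds: int,
    rng: random.Random,
) -> TrainingSet:
    """Run practice rounds, feeding labelled successes back into the training set.

    `skill` maps each task to a toy success probability and is updated in place.
    """
    data = TrainingSet(human_demonstrations())
    for _ in range(rounds):
        for task in tasks:
            actions = ["explore", "attempt " + task]  # placeholder trajectory
            succeeded = rng.random() < skill[task]
            label = auto_annotate(task, succeeded)
            if label is not None:
                data.episodes.append(Episode(task, actions, label))
                skill[task] = min(1.0, skill[task] + 0.1)  # "retraining" stub
    return data


if __name__ == "__main__":
    skills = {"mine ore": 0.3, "harvest crops": 0.5}
    result = self_improve(list(skills), skills, rounds=5, rng=random.Random(0))
    print(len(result.episodes), skills)
```

The point of the sketch is the shape of the loop: the data set starts from human examples, then grows with self-generated, automatically labelled experience, so fewer human labels are needed as training continues.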

However, the technology has its limitations. DeepMind acknowledges that SIMA 2's memory of past interactions is still limited. It also struggles with long-horizon tasks that require many sequential steps, and the current framework does not address precise low-level control, such as the fine motor movements required in robotics.

Despite its gaming focus, DeepMind stresses that SIMA 2 is not intended to be just a gaming assistant. The company views complex 3D game worlds as an ideal testing ground for AI agents that could one day control real-world robots. The broader goal is to develop general-purpose machines that can follow natural-language instructions and handle a variety of tasks in complex physical settings, paving the way for more capable intelligent robots.