Hyderabad Researchers Develop AI Method for Digital Heritage Restoration

Researchers based in Hyderabad have created an artificial intelligence-driven approach to digitally restore damaged historical artefacts. This innovative method allows for the restoration of murals, pottery, sculptures, and manuscripts without any physical contact with the fragile objects. Museums and conservation teams now have a non-invasive option for preserving heritage items.

Research Published in Scientific Reports

The research paper, titled "Automated Image Inpainting for Historical Artifact Restoration using Hybridisation of Transfer Learning with Deep Generative Models", appeared in Scientific Reports, a journal in the Nature Portfolio. Baggam Swathi, a research scholar in the computer science and engineering department at Malla Reddy University, Hyderabad, led the work alongside B Jagannadha Rao, an associate professor at the same institution.

Moving Beyond Traditional Restoration Methods

Historical artefacts frequently lose detail over time due to weathering, ageing, environmental exposure, and handling. The authors note that physical restoration is typically slow, labour-intensive, and vulnerable to human error. Digital restoration, by contrast, can be reversible, allowing reconstruction without altering the original object.

The study identifies a specific problem with conventional image inpainting techniques. Many patch-based or diffusion-style approaches struggle to retain an artefact's unique aesthetic character. The problem is particularly acute for objects with distinctive textures and patterns. The goal is to reconstruct missing sections while preserving the visual integrity of the original.

A Hybrid Deep Learning Solution

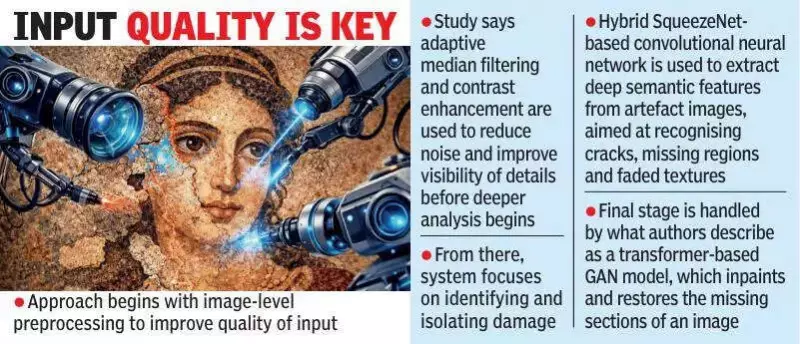

To address these challenges, the research team developed a hybrid deep learning-enabled inpainting model. They named this system HDLIP-SHAR. The approach begins with image-level preprocessing to improve input quality. The study details the use of adaptive median filtering and contrast enhancement. These steps reduce noise and improve the visibility of details before deeper analysis commences.
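The preprocessing stage can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation: the function names, the `max_window` parameter, and the choice of linear contrast stretching are assumptions made here for illustration, since the article does not say which contrast enhancement method the study uses. The filter follows the textbook adaptive median scheme, which grows the window until the median is no longer an extreme value.

```python
def adaptive_median_filter(img, max_window=7):
    """Textbook adaptive median filter on a greyscale image (list of rows of ints).

    Hypothetical helper for illustration; not taken from the paper.
    """
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for y in range(h):
        for x in range(w):
            size = 3
            while True:
                r = size // 2
                # Collect the neighbourhood, clipped at the image border.
                window = [img[j][i]
                          for j in range(max(0, y - r), min(h, y + r + 1))
                          for i in range(max(0, x - r), min(w, x + r + 1))]
                lo, hi = min(window), max(window)
                med = sorted(window)[len(window) // 2]
                if lo < med < hi:
                    # The median is not an impulse. Keep the pixel unless
                    # the pixel itself is a window extreme (likely noise).
                    out[y][x] = img[y][x] if lo < img[y][x] < hi else med
                    break
                size += 2
                if size > max_window:
                    # Window limit reached: fall back to the median.
                    out[y][x] = med
                    break
    return out


def stretch_contrast(img, lo_out=0, hi_out=255):
    """Linear contrast stretch to [lo_out, hi_out] (an assumed enhancement step)."""
    flat = [v for row in img for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        return [row[:] for row in img]  # flat image: nothing to stretch
    scale = (hi_out - lo_out) / (hi - lo)
    return [[round(lo_out + (v - lo) * scale) for v in row] for row in img]
```

Calling `stretch_contrast(adaptive_median_filter(image))` applies the two steps in the order the study describes: noise suppression first, then contrast enhancement. A production pipeline would more likely rely on an image-processing library than on nested Python lists.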

The system then identifies and isolates damage. A hybrid SqueezeNet-based convolutional neural network extracts deep semantic features from artefact images, with the aim of recognising cracks, missing regions, and faded textures. The final restoration stage is handled by a transformer-based GAN model, which inpaints the missing sections of the image.
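The detect-then-fill data flow of this stage can be shown with a toy sketch. The stand-ins below are deliberately simple and are not the models from the paper: a sentinel-value check replaces the SqueezeNet-based damage detector, and a neighbour-mean fill replaces the transformer-based GAN. All function names, the `missing_value` sentinel, and the final compositing step are assumptions for illustration.

```python
def detect_damage(img, missing_value=0):
    """Stand-in detector: marks pixels equal to a sentinel value as damaged."""
    return [[v == missing_value for v in row] for row in img]


def fill_damage(img, mask, max_passes=50):
    """Stand-in generator: fills masked pixels from known 4-neighbours,
    working inwards from the edge of each damaged region."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    unknown = [row[:] for row in mask]
    for _ in range(max_passes):
        updates = {}
        for y in range(h):
            for x in range(w):
                if not unknown[y][x]:
                    continue
                known = [out[j][i]
                         for j, i in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
                         if 0 <= j < h and 0 <= i < w and not unknown[j][i]]
                if known:
                    updates[(y, x)] = round(sum(known) / len(known))
        if not updates:
            break  # nothing left to fill, or no known pixels to fill from
        for (y, x), v in updates.items():
            out[y][x] = v
            unknown[y][x] = False
    return out


def restore(img):
    """Detect damage, generate fill values, then composite the result."""
    mask = detect_damage(img)
    filled = fill_damage(img, mask)
    # Only damaged pixels take generated values; intact pixels are
    # copied from the input unchanged.
    return [[filled[y][x] if mask[y][x] else img[y][x]
             for x in range(len(img[0]))]
            for y in range(len(img))]
```

The compositing step in `restore` reflects a common design choice in inpainting: generated content is confined to the damaged region so that intact areas of the scan stay exactly as captured.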

Recognizing Practical Constraints

The researchers also outline several constraints for their method. The approach depends heavily on the availability of high-quality reference images. This requirement can be a limiting factor in many real-world conservation settings. They further note that restoration accuracy can decrease when dealing with severely degraded artefacts.

Another practical barrier involves computation. Processing very large or high-resolution images can be resource-intensive. This raises important questions about the system's scalability for widespread use in museums and conservation labs.

The work from Hyderabad gives conservators a promising digital tool for reconstructing damaged heritage objects without putting the originals at risk.