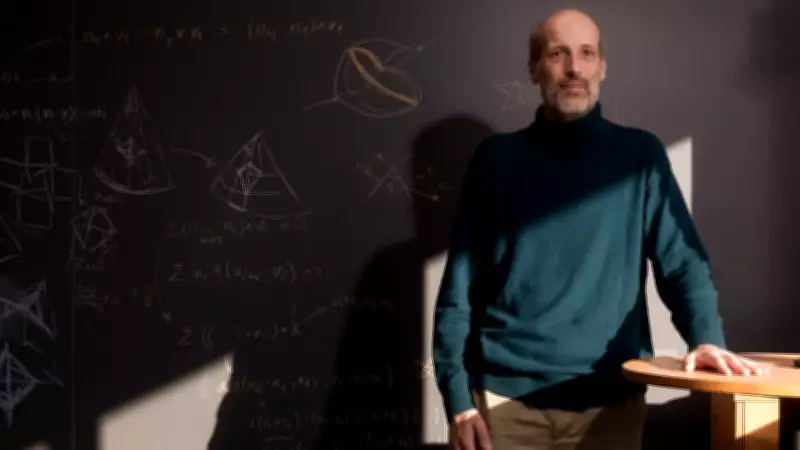

Mathematicians Develop Rigorous Tests to Evaluate AI's Logical Capabilities

A team of mathematicians and computer scientists has introduced a new suite of benchmark tests designed specifically to probe the mathematical reasoning abilities of artificial intelligence systems. The initiative aims to move beyond traditional performance metrics and examine the core logical and problem-solving skills that underpin reasoning.

Beyond Standard Benchmarks: Focusing on Mathematical Reasoning

The researchers argue that current AI evaluations often rely on datasets that AI models can memorize or pattern-match, failing to test deeper understanding. Their new benchmarks present mathematical problems that require step-by-step logical deduction, abstraction, and the application of fundamental principles. These tests are crafted to be novel and complex, ensuring that AI systems cannot simply regurgitate learned solutions but must demonstrate adaptable reasoning.
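
The article does not describe how the benchmark problems are built. As a rough illustration of the general idea of "novel" problems that cannot be answered from memory, the sketch below generates fresh linear equations from a random seed and computes the exact answer programmatically. The names `make_linear_problem` and `grade` are hypothetical and are not taken from the researchers' work; real reasoning benchmarks would involve far harder problems than this.

```python
import random
from fractions import Fraction

def make_linear_problem(rng):
    """Build one 'solve a*x + b = c' problem with a known exact answer.

    Hypothetical helper for illustration only; not the researchers' method.
    """
    a = rng.choice([n for n in range(-9, 10) if n != 0])  # non-zero coefficient
    b = rng.randint(-20, 20)
    x = Fraction(rng.randint(-50, 50), rng.randint(1, 9))  # exact rational solution
    c = a * x + b  # right-hand side is derived, so the answer is known by construction
    prompt = f"Solve for x: {a}*x + ({b}) = {c}"
    return prompt, x

def grade(answer_text, expected):
    """Compare a textual answer such as '7/3' or '2.5' against the exact solution."""
    try:
        return Fraction(answer_text.strip()) == expected
    except (ValueError, ZeroDivisionError):
        return False  # unparseable answers count as wrong

# A fixed seed makes the problem set reproducible; a new seed gives a new set.
rng = random.Random(0)
problems = [make_linear_problem(rng) for _ in range(5)]
```

Because each instance is drawn at random and graded against an exact value, a system cannot score well simply by recalling a previously seen question and answer pair.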

Initial testing highlighted three areas where AI systems fall short:

- Logical Consistency: AI models frequently produce answers that are mathematically inconsistent or contain hidden contradictions, even when responses appear correct on the surface.

- Generalization Issues: Systems trained on specific problem types struggle to apply similar reasoning to slightly altered or entirely new mathematical scenarios, indicating a lack of true comprehension.

- Over-reliance on Training Data: The performance of many AI systems degrades significantly when they face problems outside their training distribution, suggesting limitations in autonomous problem-solving.
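
The article gives no figures or scoring rules for these findings. One plausible way to quantify two of them, offered only as a sketch under assumed definitions, is to compare accuracy on familiar versus altered problems and to check whether a system gives the same answer to equivalent phrasings of one problem. The functions `accuracy`, `generalization_gap`, and `is_consistent`, and the toy `lookup_model`, are all hypothetical stand-ins.

```python
def accuracy(model, problems):
    """Fraction of (prompt, expected) pairs the model answers correctly."""
    if not problems:
        raise ValueError("problems must be non-empty")
    correct = sum(1 for prompt, expected in problems if model(prompt) == expected)
    return correct / len(problems)

def generalization_gap(model, familiar, altered):
    """Accuracy drop when moving from familiar problems to altered ones."""
    return accuracy(model, familiar) - accuracy(model, altered)

def is_consistent(model, equivalent_prompts):
    """True if the model gives one and the same answer to every rephrasing."""
    return len({model(p) for p in equivalent_prompts}) == 1

# Toy stand-in for an AI system: it only "knows" prompts it has memorized.
memorized = {"2+3": 5, "4+4": 8}

def lookup_model(prompt):
    return memorized.get(prompt)  # returns None for anything unseen

familiar = [("2+3", 5), ("4+4", 8)]   # identical to the memorized prompts
altered = [("3+2", 5), ("4+5", 9)]    # slightly changed variants

gap = generalization_gap(lookup_model, familiar, altered)
consistent = is_consistent(lookup_model, ["2+3", "3+2"])
```

The memorizing stand-in scores perfectly on the familiar set, fails every altered problem, and answers the two equivalent prompts differently, which is the pattern of behavior the list above describes.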

Implications for AI Development and Safety

This research carries significant implications for the future of AI development. By identifying weaknesses in logical reasoning, the mathematicians provide a roadmap for creating more robust and reliable AI systems. The benchmarks give developers a tool for refining algorithms so that AI can handle real-world tasks requiring precise and consistent logical thought, such as scientific research, engineering, and critical decision-making.

Moreover, the study underscores the importance of interdisciplinary collaboration. Combining expertise from mathematics, computer science, and cognitive science is essential to advance AI beyond narrow capabilities toward more general intelligence. The researchers emphasize that ongoing testing and refinement of these benchmarks will be vital as AI technology evolves, helping to mitigate risks associated with deploying AI in high-stakes environments.

In summary, this initiative represents a pivotal step in the quest to build truly intelligent machines. By rigorously testing AI's mathematical prowess, mathematicians are not only exposing current limitations but also paving the way for innovations that could lead to more trustworthy and capable artificial intelligence systems in the years to come.