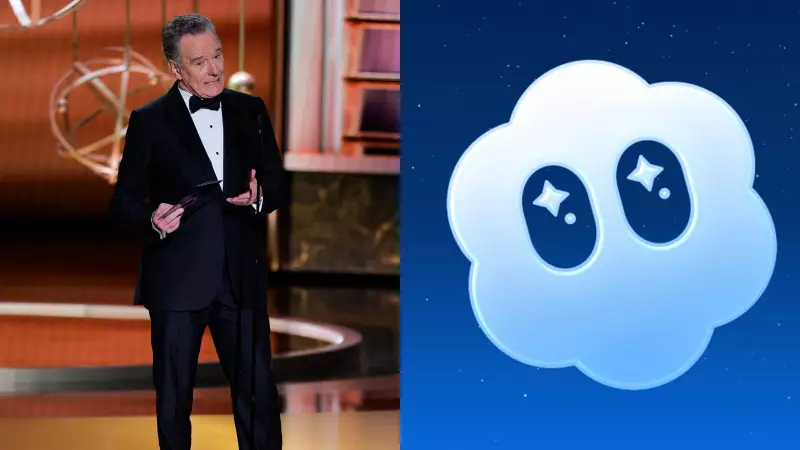

OpenAI has introduced new restrictions on Sora, its video generation technology. The company has banned the creation of celebrity deepfakes and other unauthorized likenesses following a security incident involving a malicious actor.

The Breaking Point: Security Breach Forces Action

The policy change comes after what OpenAI describes as an attempt by a "bad actor" to exploit Sora's capabilities to create unauthorized celebrity content. The incident served as a wake-up call for the company, highlighting the need for stronger safeguards around its video generation technology.

OpenAI's new usage policy now explicitly prohibits:

- Generating videos of real people, including celebrities

- Creating content featuring other individuals without their consent

- Producing sexually explicit material

- Generating extreme violence or hateful content

- Any content that violates trademarks or intellectual property

Understanding the AI 'Slop' Phenomenon

The term "AI slop" has emerged as a popular descriptor for low-quality, mass-produced AI content that floods digital platforms. Much like email spam in the early days of the internet, AI slop represents the darker side of accessible artificial intelligence tools: content created without regard for quality, accuracy, or ethical considerations.

"We're seeing the emergence of what some are calling 'AI slop' - content generated primarily to capture attention rather than provide value," explained an industry analyst familiar with the situation.

Sora's Revolutionary Capabilities Meet Real-World Constraints

When OpenAI first unveiled Sora earlier this year, observers were struck by its ability to generate realistic video content from simple text prompts. The model can create complex scenes with multiple characters, specific types of motion, and accurate details of subjects and backgrounds.

However, this very capability raised immediate concerns about potential misuse. The technology's sophistication in rendering human likenesses and realistic scenarios made it particularly vulnerable to exploitation for creating convincing deepfakes and unauthorized celebrity content.

The Broader Impact on AI Industry Standards

OpenAI's action represents a significant moment for the entire artificial intelligence industry. Because the company is one of the sector's leading innovators, its policy decisions often set precedents that other companies follow.

Industry experts note several key implications:

- Increased scrutiny on AI video generation tools across the board

- Growing pressure for competitors to implement similar restrictions

- Enhanced focus on digital authentication and content verification

- Accelerated development of AI detection technologies

What This Means for Content Creators and Users

For legitimate users of Sora and similar AI tools, these new restrictions provide clearer guidelines for ethical content creation. The rules aim to strike a balance between innovation and responsibility, allowing creative professionals to leverage AI capabilities while preventing harmful applications.

"This isn't about limiting creativity," a spokesperson emphasized. "It's about ensuring that powerful technologies develop in ways that respect individual rights and promote positive applications."

The company has indicated that it is developing more sophisticated content verification systems and working on technical solutions to prevent policy violations before they occur.

The Future of AI Content Moderation

OpenAI's stance signals a new phase in AI development, one in which ethical considerations and security measures are becoming integral to technology deployment rather than afterthoughts. As AI capabilities continue to advance rapidly, the industry faces increasing pressure to implement robust safeguards.

The Sora incident and subsequent policy changes highlight the ongoing challenge facing AI developers: how to harness transformative technology while preventing its misuse in an increasingly digital world.