Politics

India-China Thaw in 2025: A Fragile Reset Driven by Compulsion, Not Trust

Five years after Galwan, India and China resume talks and trade. But the 2025 thaw is a tactical détente, not trust. Exports rise 90%, yet the trade deficit nears $106bn. Can this fragile calm last?

World

Maduro's 2026 New Year Address: Venezuela 'Unstoppable', Cites Military-Police Fusion

Venezuelan President Nicolás Maduro declares 2025 a year of 'national consensus' and highlights security and an anti-imperialist stance in his New Year address.

Business

Sensex, Nifty Poised for Record Opening on Jan 1, 2026; Gift Nifty at 26,341

Indian stock markets are set to open at record highs on the first trading day of 2026, driven by positive global cues and strong domestic sentiment. Key factors include Gift Nifty trends, Asian market performance, and commodity price movements.

Entertainment

Dwyane Wade's Intimate Black & White Photo With Gabrielle Union Captivates Fans

NBA legend Dwyane Wade shares a simple yet powerful black-and-white photo with wife Gabrielle Union on Instagram, reminding fans that genuine moments matter most.

Health

Indore Water Contamination: Officials Face Action, Deaths Rise

Severe water contamination in Indore leads to multiple deaths. Two officials suspended, one dismissed. Authorities scramble to contain the crisis.

Best Parenting Advice: Welcome Feelings, Guide Behaviours

Developmental psychologist Dr. Aliza Pressman shares transformative parenting advice: validate all feelings but set clear behaviour limits. Learn how this principle builds emotionally healthy children.

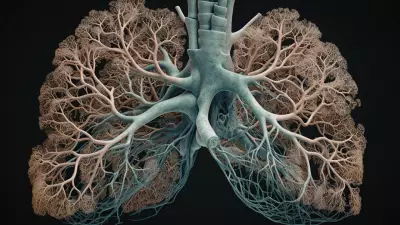

Delhi man beats asthma with yoga and traditional therapy

Kamal Kumar Singhal, 44, from Delhi, overcame chronic asthma through a dedicated yoga regimen and a personalized wellness program at Patanjali. Discover his transformative journey to health.

Manipal Sarjapur Opens Dedicated Deep Brain Stimulation Clinic

Manipal Hospital Sarjapur Road launches a dedicated Deep Brain Stimulation (DBS) clinic in Bangalore for advanced treatment of Parkinson's disease, OCD and depression.

Why Amla is Your Essential Winter Superfood

Discover why Amla (Indian gooseberry) is a winter essential. Packed with Vitamin C, it boosts immunity, fights colds, aids digestion, and keeps skin glowing. Learn how to include it in your daily diet!
