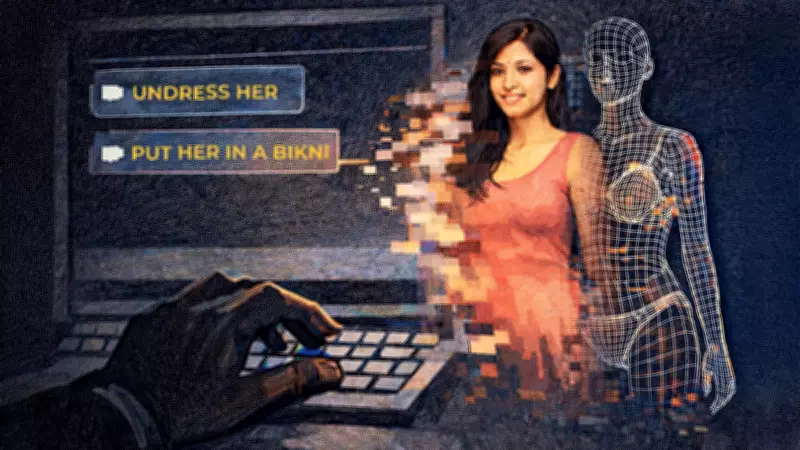

A chilling new form of digital extortion is sweeping across India, powered by artificial intelligence applications that can digitally strip the clothing from people in ordinary photographs. These so-called nudify apps are fueling a dangerous wave of cyber blackmail that is leaving families terrified and authorities scrambling for solutions.

The Ghaziabad Nightmare: A Family's Harrowing Experience

The disturbing reality of this technological threat became painfully clear for one Ghaziabad family last year. Ramesh Verma (name changed), a 41-year-old resident, was enjoying a quiet dinner with his wife Anjali in their apartment when their peaceful evening was shattered by an unexpected phone call.

"The man on the line spoke with chilling casualness," Verma recalls, his voice still carrying the trauma of that moment. "He simply stated, 'I have bad photos of you and your wife.' I initially dismissed it as another scam attempt and disconnected the call."

Persistent Threats and Psychological Torment

What followed was a campaign of psychological harassment that few could have anticipated. The calls continued relentlessly, each one more threatening than the last. The perpetrators had apparently used AI technology to create compromising images, though Verma and his wife had never taken or shared such photographs.

This case is just one of many similar incidents being reported across urban India, where AI tools are being weaponized for extortion. Nudify applications have become increasingly accessible, allowing even people with minimal technical skills to create convincing fake nude images from ordinary photographs.

Law enforcement agencies are facing unprecedented challenges in combating this new form of cybercrime. The digital nature of the evidence, combined with the psychological impact on victims, creates complex investigation scenarios that traditional policing methods struggle to address effectively.