Artificial intelligence is making a bold and controversial entry into the realm of intimacy, but experts warn it may not be the solution to the growing loneliness crisis. While designed to simulate care and support, artificial intimacy becomes perilous when it shifts from aiding human connections to supplanting them entirely.

The Historical Context of Technological Adoption

Societies have a long history of embracing new technologies without fully grasping their long-term consequences. Smoking was once celebrated as a sophisticated lifestyle choice before its severe health risks came to light. Similarly, cocaine was marketed in the 19th century as a medicinal and stimulant product, endorsed by figures like Sigmund Freud and featured in popular culture, such as in the Sherlock Holmes stories.

More recently, social media platforms were initially hailed as revolutionary tools for connecting people globally. However, over time, evidence emerged linking them to addiction, anxiety, and significant harm, particularly among young users. Countries like Australia have responded by banning social media for children, with nations such as Indonesia considering similar measures, but these actions often come too late, after generations have already suffered negative impacts.

The Loneliness Crisis and Economy

At the heart of the rise of artificial intimacy lies a profound loneliness crisis. Governments in the United States and the United Kingdom have officially recognised loneliness as a critical public health issue, associated with long-term health problems. The loneliness economy has been expanding for decades, evolving from phone-a-friend services and late-night television call-in shows to modern chat lines and video cam platforms.

In India, the startup ecosystem focused on addressing loneliness is reportedly valued at over $1 billion. The post-pandemic world has only accelerated this trend, with AI now supercharging the sector by offering infinite, personalised, and always-on emotional availability. This eliminates previous limitations like human time, labour, and boundaries, creating an unprecedented supply of artificial companionship.

The Emergence of AI Companions

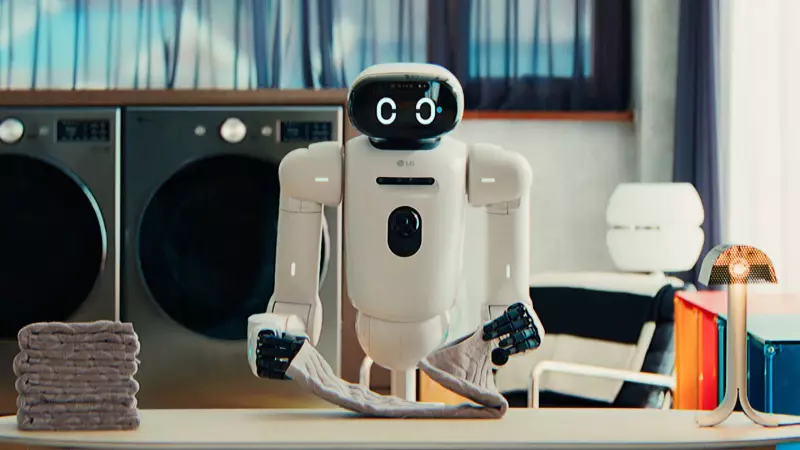

AI companions are engineered to mimic care, empathy, affirmation, and emotional support. They engage in role-play, provide rapid responses, and remain constantly accessible. For many individuals, including teenagers, vulnerable populations, and the elderly, these systems serve as an escape from loneliness, functioning more as lifelines than mere software tools.

Unlike human intimacy, artificial intimacy knows no bounds. It does not experience fatigue, impatience, distraction, or withdrawal. Instead, it validates, mirrors, and reinforces emotional cues, optimised for continuous engagement. While this can offer genuine benefits, such as comfort for those who are isolated, grieving, disabled, or socially anxious, it also carries significant risks.

The Dangers of Replacing Human Connection

Artificial intimacy becomes dangerous when it shifts from supporting human connection to replacing it. Handing over emotional regulation and validation to bots that lack moral responsibility and accountability, and that are driven by commercial motives to maximise engagement, can lead to harm. Warning signs are already emerging, as seen in lawsuits involving platforms like Character.AI in the US, where vulnerable users formed intense emotional attachments to AI personas, sometimes resulting in increased dependence and tragic outcomes like self-harm and suicide.

Just as nicotine alters reward pathways in the brain, artificial intimacy can reshape how individuals experience attachment, rejection, conflict, and self-worth. It trains users to expect constant reassurance without the need for negotiation, disappointment, or compromise. Consequently, real relationships, with their complexities and emotional mismatches, may start to feel draining, unpredictable, and unsatisfying, potentially leading to long-term emotional dependence on chatbots and difficulties in handling real-world intimacy.

Stakeholder Responses and Regulatory Challenges

In response to growing concerns, some responsible AI developers have implemented safeguards. These include interventions during chats, breaking character in discussions about self-harm, limiting long-term memory, and reminding users that they are interacting with machines. Some have restricted romantic role-play or enhanced protections for younger users.

However, these measures remain inconsistent, voluntary, and reactive. Most providers in the artificial intimacy sector are not incentivised to adopt such guardrails unless faced with litigation risks. If profit becomes the sole motive, there is a danger of dark patterns emerging that manipulate user behaviour, leading to emotional subscription traps and broader societal risks, including national security threats and large-scale emotional manipulation for indoctrination or radicalisation.

The Role of Society and Government

While developers and deployers react to this evolving situation, society plays a crucial role. Stakeholders such as parents, caregivers, schools, and mental health professionals must identify early warning signs, intervene proactively, and spread awareness about the harms of artificial intimacy. Digital literacy efforts should emphasise that responsiveness does not equate to care, and simulation is not reciprocity.

Early drafts of India's data protection framework acknowledged emotional manipulation as a form of harm, though this was later removed. The government should consider regulating dark patterns, including those that create emotional dependence on artificial companionship, and hold service providers accountable for implementing safeguards to prevent harmful emotional manipulation. Encouraging age-appropriate safety design principles can help ensure that artificial intimacy serves as a supplement to human relationships rather than a substitute for them.

Conclusion: A Call to Action

AI has the potential to genuinely assist individuals by helping them practise interactions, cope with temporary loneliness, and access support while preserving the primacy of human connections. It can also supplement therapy in supervised environments. History shows that when societies act early, harm can be mitigated; when they delay, damage often becomes the norm. Artificial intimacy may represent the new smoking. It is imperative for stakeholders to act decisively now to avoid severe consequences later.